Deep learning is a powerful branch of artificial intelligence (AI) that is transforming the way machines process and understand data. As a subset of machine learning, deep learning uses neural networks with many layers to analyze and interpret complex patterns in data, making it a crucial technology for tasks like image recognition, natural language processing, and speech recognition. Unlike traditional machine learning algorithms, which typically depend on hand-engineered features, deep learning models learn useful features directly from raw data, which often leads to more accurate predictions on complex tasks.

If you’re interested in building intelligent systems that can recognize images, translate languages, or even drive cars, then this deep learning tutorial is the perfect place to start. In this comprehensive guide, you’ll learn the fundamentals of deep learning, explore real-world applications, and follow along with hands-on Python code examples to solidify your understanding.

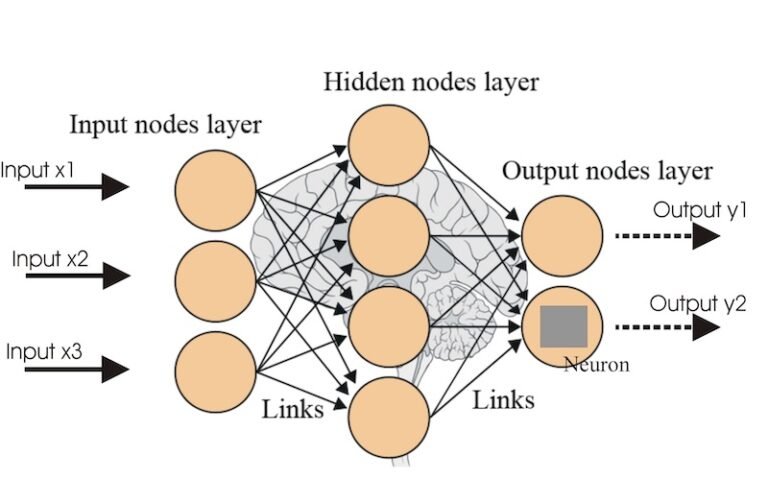

A neural network is the building block of deep learning. It is composed of layers of interconnected nodes, or "neurons," loosely inspired by the neurons of the human brain. Neural networks are designed to recognize patterns in data by adjusting their internal parameters (weights and biases) during training.

Neural networks allow models to learn and predict complex patterns from raw data, which traditional machine learning algorithms struggle to do.

```python
from keras.models import Sequential
from keras.layers import Dense

# Create a simple neural network with one hidden layer:
# 4 input features -> 8 ReLU neurons -> 1 sigmoid output (a probability for binary classification)
model = Sequential()
model.add(Dense(8, input_dim=4, activation='relu'))
model.add(Dense(1, activation='sigmoid'))

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```
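To see what the Keras model above actually computes, here is a minimal NumPy sketch of its forward pass: each `Dense` layer multiplies its input by a weight matrix, adds a bias, and applies an activation. The weights here are random and untrained, and the input batch is hypothetical data; it illustrates the arithmetic, not how Keras is implemented internally.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Forward pass of a 4 -> 8 -> 1 network, mirroring Dense(8, relu) followed by Dense(1, sigmoid)."""
    hidden = relu(x @ W1 + b1)          # shape (batch, 8)
    return sigmoid(hidden @ W2 + b2)    # shape (batch, 1), values between 0 and 1

rng = np.random.default_rng(0)
# Random illustrative parameters (Keras defaults to Glorot-uniform weights and zero biases)
W1 = rng.normal(scale=0.5, size=(4, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)

x = rng.normal(size=(3, 4))   # hypothetical batch: 3 samples with 4 features each
probs = forward(x, W1, b1, W2, b2)
print(probs.shape)            # (3, 1)
```

The 4 x 8 + 8 and 8 x 1 + 1 parameters add up to 49, which is the total Keras reports for this network.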

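Backpropagation is the algorithm that lets neural networks learn from data. After a forward pass produces a prediction and the loss function measures its error, backpropagation applies the chain rule backwards through the layers to compute the gradient of the loss with respect to every weight. An optimizer such as Adam then uses these gradients to update the weights, which is crucial for making accurate predictions.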
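Keras computes these gradients automatically when you call `model.fit()`, but the mechanics fit in a few lines of NumPy. The sketch below, a minimal illustration rather than how any library implements it, trains a tiny 2 -> 4 -> 1 network (tanh hidden layer, sigmoid output, binary cross-entropy) on the toy XOR dataset. The helper `loss_and_grads`, the dataset, and the learning rate are all hypothetical choices for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(params, X, y):
    """Forward pass, binary cross-entropy loss, and gradients computed by backpropagation."""
    W1, b1, W2, b2 = params
    # Forward pass: input -> tanh hidden layer -> sigmoid output
    h = np.tanh(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)
    p_safe = np.clip(p, 1e-12, 1 - 1e-12)   # avoid log(0)
    loss = -np.mean(y * np.log(p_safe) + (1 - y) * np.log(1 - p_safe))

    # Backward pass: apply the chain rule from the output layer back to the input layer
    n = X.shape[0]
    dz2 = (p - y) / n            # gradient w.r.t. the output pre-activation (sigmoid + cross-entropy)
    dW2 = h.T @ dz2
    db2 = dz2.sum(axis=0)
    dh = dz2 @ W2.T              # error propagated back to the hidden layer
    dz1 = dh * (1 - h ** 2)      # multiply by the derivative of tanh
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]

# Toy XOR dataset (hypothetical example data): 4 samples, 2 features, binary labels
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(42)
params = [rng.normal(size=(2, 4)), np.zeros(4), rng.normal(size=(4, 1)), np.zeros(1)]

learning_rate = 0.5
initial_loss, _ = loss_and_grads(params, X, y)
for step in range(2000):
    loss, grads = loss_and_grads(params, X, y)
    # Plain gradient descent: move every parameter a small step against its gradient
    params = [param - learning_rate * grad for param, grad in zip(params, grads)]
final_loss, _ = loss_and_grads(params, X, y)
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

A quick way to check a hand-written backward pass is to compare one analytic gradient against a finite-difference estimate of the loss; the two should agree to several decimal places.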

In deep learning, image processing refers to the use of algorithms and techniques to manipulate and analyse images in a way that a machine learning model can understand. Neural networks, especially Convolutional Neural Networks (CNNs), are highly effective for tasks such as image classification, object detection, and segmentation.

With the rise of computer vision applications, understanding how to process and manipulate images for model training has become essential.

```python
from keras.preprocessing import image
import numpy as np

# Load an image from file and resize it to 150 x 150 pixels
img = image.load_img('example_image.jpg', target_size=(150, 150))

# Convert the image to a NumPy array of shape (150, 150, 3)
img_array = image.img_to_array(img)

# Normalize the pixel values from [0, 255] to the range [0, 1]
img_array /= 255.0

print(img_array.shape)  # Check the shape of the processed image
```
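Keras models expect a leading batch axis, so a single processed image of shape (150, 150, 3) usually has to become (1, 150, 150, 3) before it is passed to `model.predict()`. The minimal NumPy sketch below shows that step together with the normalization, using a randomly generated array as a hypothetical stand-in for a loaded photo.

```python
import numpy as np

# Hypothetical stand-in for a loaded RGB image: 150 x 150 pixels, 3 channels, 8-bit values
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(150, 150, 3), dtype=np.uint8)

# Convert to float first: an 8-bit integer array cannot be divided by 255.0 in place
img_array = raw.astype(np.float32) / 255.0

# Add a leading batch dimension: (150, 150, 3) -> (1, 150, 150, 3)
batch = np.expand_dims(img_array, axis=0)
print(batch.shape)  # (1, 150, 150, 3)
```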

Transfer learning involves taking a pre-trained model (usually trained on a large dataset like ImageNet) and adapting it to a new task, typically one with a much smaller dataset. Instead of training a model from scratch, we reuse the features the existing model has already learned, either by freezing its layers and training only a new classifier on top, or by fine-tuning some of its layers on the new data.

Transfer learning enables faster training and often improves performance, especially when working with limited data.

```python
from keras.applications import VGG16
from keras.models import Sequential
from keras.layers import Flatten, Dense

# Load the pre-trained VGG16 model without its ImageNet classification head
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(64, 64, 3))

# Freeze the layers of the base model so their pre-trained weights are not updated
for layer in base_model.layers:
    layer.trainable = False

# Add new layers for your specific task
model = Sequential([
    base_model,
    Flatten(),
    Dense(128, activation='relu'),
    Dense(1, activation='sigmoid')
])

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```
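The essence of freezing is that the base model's weights are used but never updated, while gradient descent adjusts only the new head. As a framework-free sketch of that idea, the NumPy code below uses a fixed random ReLU layer as a hypothetical stand-in for a pre-trained feature extractor (it is not VGG16) and trains only a single sigmoid unit on top. The dataset, sizes, and learning rate are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained base model: one fixed ReLU layer (NOT VGG16)
W_base = rng.normal(size=(20, 16)) / np.sqrt(20)
W_base_before = W_base.copy()

def extract_features(X):
    """Frozen feature extractor: its weights are used but never updated."""
    return np.maximum(0.0, X @ W_base)

def head(features, w, b):
    """Trainable head: a single sigmoid unit, comparable to Dense(1, activation='sigmoid')."""
    return 1.0 / (1.0 + np.exp(-(features @ w + b)))

def bce(p, y):
    p = np.clip(p, 1e-12, 1 - 1e-12)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

# Hypothetical small labelled dataset for the new task
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] > 0).astype(float).reshape(-1, 1)

w_head = np.zeros((16, 1))
b_head = 0.0
features = extract_features(X)   # computed once, because the frozen base never changes

initial_loss = bce(head(features, w_head, b_head), y)
for _ in range(500):
    grad = (head(features, w_head, b_head) - y) / len(X)
    # Gradient descent on the head only; W_base is deliberately left untouched
    w_head -= 0.5 * features.T @ grad
    b_head -= 0.5 * grad.sum()
final_loss = bce(head(features, w_head, b_head), y)
print(f"head loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

Because the frozen features never change, they can be computed once and reused, which is one reason training only a new head is so much cheaper than training from scratch.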

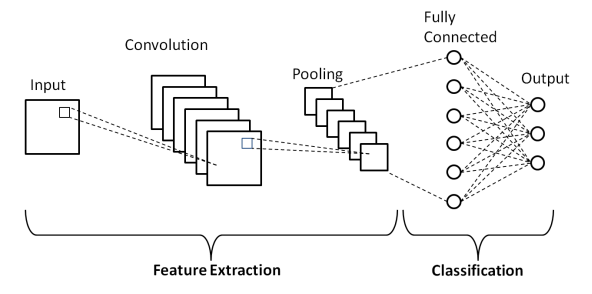

CNNs are a specialised type of neural network designed for image-related tasks. They use convolutional layers to automatically detect features in images, such as edges, textures, and objects, by applying filters to the input data.

CNNs have revolutionised computer vision, enabling models to match or exceed human-level accuracy on some image-recognition benchmarks.

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Create a CNN model
model = Sequential()
model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Flatten())
model.add(Dense(128, activation='relu'))
model.add(Dense(1, activation='sigmoid'))

# Compile the CNN model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```
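To see what `Conv2D` and `MaxPooling2D` compute, here is a minimal NumPy sketch of a single-channel "valid" convolution (implemented, as in deep learning libraries, as cross-correlation) and 2 x 2 max pooling. The helper names, the synthetic image, and the hand-picked vertical-edge filter are illustrative; a trained CNN learns its own filter values. The shapes match the model above: a 3 x 3 kernel without padding turns 64 x 64 into 62 x 62, pooling reduces that to 31 x 31, and flattening 32 such feature maps yields 31 x 31 x 32 = 30,752 values feeding the `Dense(128)` layer.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Single-channel 'valid' 2D cross-correlation (what Conv2D computes per filter and channel)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows/columns that don't fill a window are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# Hypothetical 64 x 64 grayscale image: dark left half, bright right half
image = np.zeros((64, 64))
image[:, 32:] = 1.0

# Hand-picked vertical-edge filter (illustrative; a CNN learns its filters during training)
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

features = conv2d_valid(image, kernel)   # (62, 62): nonzero only next to the edge
pooled = max_pool2d(features)            # (31, 31)
print(features.shape, pooled.shape)      # (62, 62) (31, 31)
```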

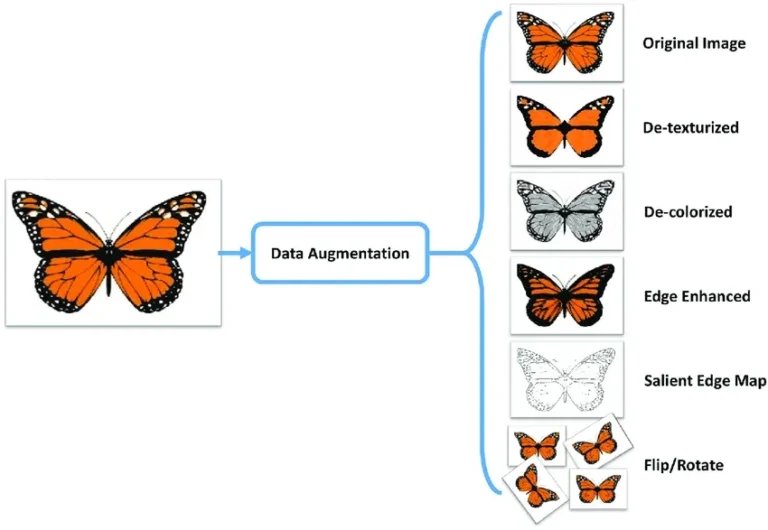

Image augmentation involves creating modified versions of images in a dataset, such as rotating, flipping, or zooming, to artificially increase the size of the training data. This technique is especially helpful in avoiding overfitting when working with small datasets.

Augmenting images allows the model to learn from a wider variety of examples, improving generalisation and performance.

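```python
from keras.preprocessing.image import ImageDataGenerator

# Create an ImageDataGenerator object with augmentation settings
datagen = ImageDataGenerator(rotation_range=40, width_shift_range=0.2, height_shift_range=0.2, shear_range=0.2, zoom_range=0.2, horizontal_flip=True)

# Generate batches of randomly augmented images (assuming X_train holds the training images and y_train their labels).
# datagen.fit(X_train) is only needed for featurewise normalization or ZCA whitening, not for these settings.
train_batches = datagen.flow(X_train, y_train, batch_size=32)
```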
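Each augmentation is a simple array transformation applied on the fly, so every epoch sees slightly different images. The NumPy sketch below shows two of them, a horizontal flip (applied to roughly half the images, as `horizontal_flip=True` does) and a random horizontal shift of up to 20% of the width. The helper names and the synthetic image are hypothetical, and this is not how `ImageDataGenerator` is implemented internally; Keras also interpolates and fills edge pixels according to its `fill_mode` setting.

```python
import numpy as np

rng = np.random.default_rng(0)

def horizontal_flip(img):
    """Mirror a (height, width, channels) image left to right."""
    return img[:, ::-1, :]

def random_width_shift(img, max_fraction=0.2):
    """Shift left or right by up to max_fraction of the width, repeating the nearest edge column."""
    width = img.shape[1]
    limit = int(max_fraction * width)
    shift = int(rng.integers(-limit, limit + 1))
    if shift == 0:
        return img.copy()
    if shift > 0:   # move content right and repeat the left edge
        pad = np.repeat(img[:, :1, :], shift, axis=1)
        return np.concatenate([pad, img[:, :-shift, :]], axis=1)
    pad = np.repeat(img[:, -1:, :], -shift, axis=1)   # move content left and repeat the right edge
    return np.concatenate([img[:, -shift:, :], pad], axis=1)

def augment(img):
    if rng.random() < 0.5:   # flip roughly half of the images
        img = horizontal_flip(img)
    return random_width_shift(img)

image = rng.random((64, 64, 3))   # hypothetical RGB image with values in [0, 1)
augmented_batch = np.stack([augment(image) for _ in range(4)])
print(augmented_batch.shape)      # (4, 64, 64, 3)
```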

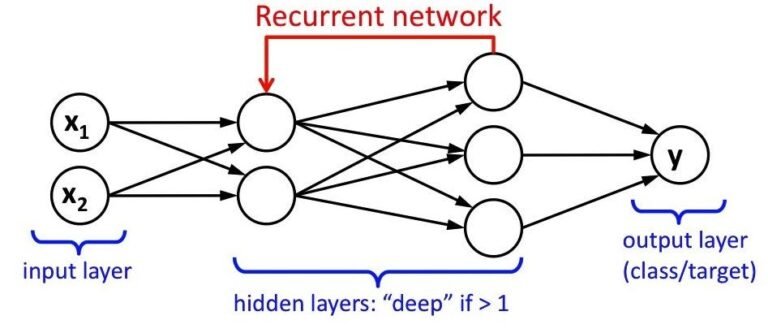

RNNs are specialised neural networks designed to handle sequential data, such as time series, text, and audio. Unlike feedforward neural networks, which treat each input independently, RNNs have connections that form loops: the hidden state computed at one time step is fed back in at the next, so the network retains information from previous inputs. This makes them effective for tasks like language translation and speech recognition.

RNNs are crucial for handling temporal data, where the order of the input matters.

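```python
from keras.models import Sequential
from keras.layers import SimpleRNN, Dense

# Create a simple RNN model: 50 recurrent units reading sequences of 10 time steps with 1 feature each
model = Sequential()
model.add(SimpleRNN(50, input_shape=(10, 1)))
model.add(Dense(1))

# Compile the RNN model for regression (e.g. predicting the next value of a time series)
model.compile(optimizer='adam', loss='mean_squared_error')
```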
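The "loop" in an RNN is easiest to see in code. Below is a minimal NumPy sketch of the recurrence a `SimpleRNN` layer computes with its default tanh activation, `h_t = tanh(x_t W_x + h_{t-1} W_h + b)`, using the same sizes as the model above (10 time steps, 1 feature, 50 units). The weights are random and untrained, and the input sequence is hypothetical data.

```python
import numpy as np

def simple_rnn(sequence, W_x, W_h, b):
    """Run a vanilla RNN over a (time_steps, features) sequence and return the final hidden state."""
    h = np.zeros(W_h.shape[0])                 # hidden state starts at zero
    for x_t in sequence:                       # process time steps in order
        h = np.tanh(x_t @ W_x + h @ W_h + b)   # new state depends on the input and the previous state
    return h

rng = np.random.default_rng(0)
units, features, time_steps = 50, 1, 10
W_x = rng.normal(scale=0.3, size=(features, units))
W_h = rng.normal(scale=0.3 / np.sqrt(units), size=(units, units))
b = np.zeros(units)

sequence = rng.normal(size=(time_steps, features))     # hypothetical input sequence
h_forward = simple_rnn(sequence, W_x, W_h, b)
h_reversed = simple_rnn(sequence[::-1], W_x, W_h, b)   # same values, opposite order
print(h_forward.shape)                                 # (50,)
print(np.allclose(h_forward, h_reversed))
```

Feeding the same values in reverse order gives a different final state, which is exactly why RNNs suit data where order matters. In the Keras model, the `Dense(1)` layer then maps this 50-dimensional state to a single prediction.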

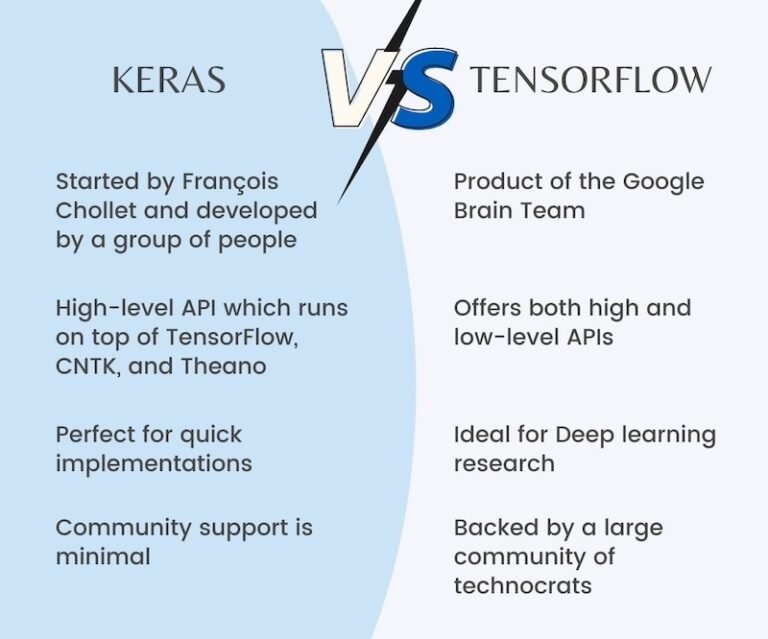

TensorFlow and Keras are two popular libraries for building and training deep learning models. TensorFlow is a lower-level library that offers fine-grained control, while Keras is a high-level API that simplifies model creation and training. Keras is available inside TensorFlow as `tf.keras` (Keras 3 can also run on JAX or PyTorch backends), so you can prototype a model quickly and then scale it for production with TensorFlow's tooling.

Keras provides an easy-to-use interface for building deep learning models, while TensorFlow ensures scalability and performance for larger projects.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Build a simple model using TensorFlow and Keras
model = tf.keras.Sequential([
    layers.Dense(64, activation='relu', input_shape=(32,)),
    layers.Dense(10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# Summary of the model
model.summary()
```
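`model.summary()` lists a parameter count for each layer. For a `Dense` layer with a bias, that count is (inputs x units) weights plus one bias per unit, which is easy to verify by hand. The small helper below, `dense_params`, is a hypothetical illustration (not part of Keras) that reproduces the numbers for the model above and for the first 4 -> 8 -> 1 example network.

```python
def dense_params(inputs: int, units: int) -> int:
    """Weights (inputs x units) plus one bias per unit, as in a Dense layer with use_bias=True."""
    return inputs * units + units

hidden = dense_params(32, 64)   # 32 * 64 + 64 = 2112
output = dense_params(64, 10)   # 64 * 10 + 10 = 650
total = hidden + output
print(hidden, output, total)    # 2112 650 2762

first_network = dense_params(4, 8) + dense_params(8, 1)
print(first_network)            # 49
```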

This tutorial provides a solid foundation, but there is much more to explore in deep learning. At Netmax Technologies, we offer a comprehensive Deep Learning Course that dives deeper into these topics.

In our course, you’ll have access to:

Join us at Netmax Technologies and take the next step toward becoming an expert in deep learning.